By: Diane Bergeron

In 1932, Walter Cannon described the ‘fight or flight’ response,[i] in which a person either behaves aggressively or flees. Shelley Taylor, a renowned psychologist and stress researcher, questioned this limited perspective and noticed that most stress research had been conducted on males, especially male rats. Drawing on the overwhelming evidence that females tend to respond to stress with affiliative behaviors (e.g., seeking out and giving social support), she developed the highly influential ‘tend and befriend’ theory to explain a third way that humans respond to stress.[ii, iii] She also noted that, prior to 1995, women made up only 17% of participants in stress response studies.

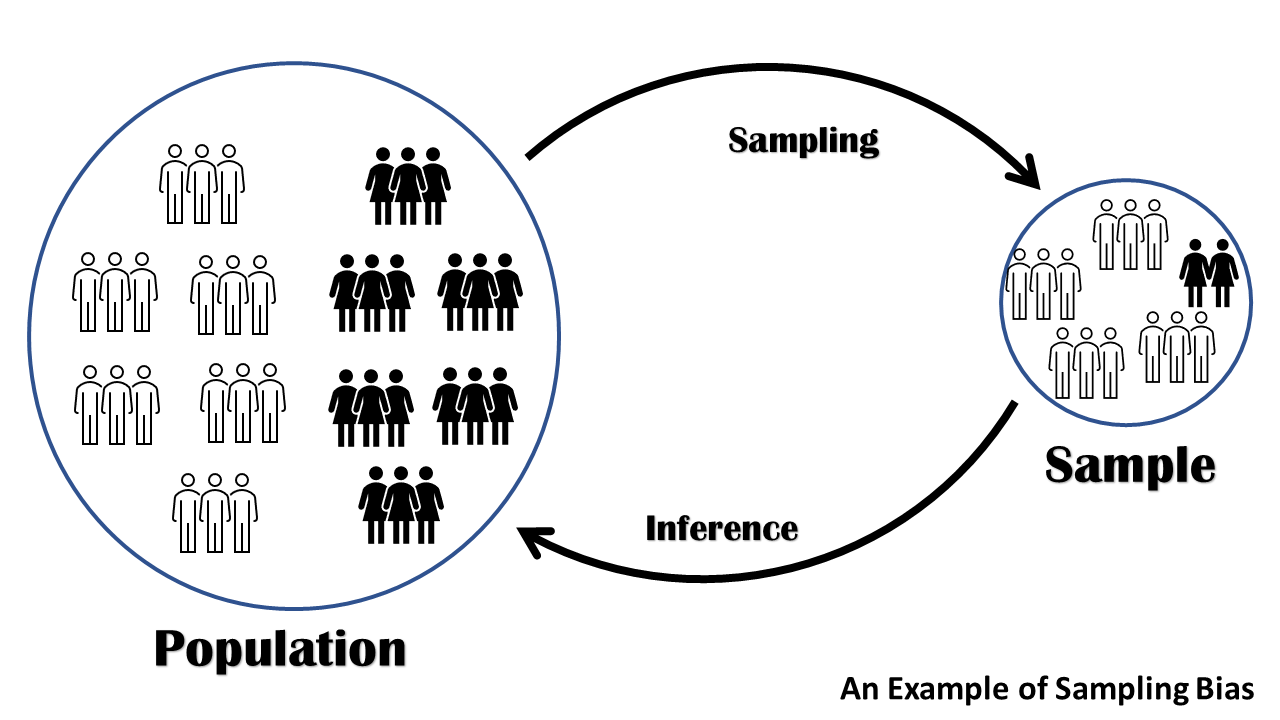

This is an example of sampling bias[iv], something that researchers must actively work to mitigate when designing a study. For instance, we might ensure that our sample fairly reflects different demographic and identity variables (e.g., race, organizational rank, gender identity) so that the inferences we derive from the sample generalize to a larger population. We also have other safeguards in the scientific process, such as anonymous peer review of our papers, to help strengthen our (supposed) objectivity and ensure that the scientific method is applied.

But What Happens When Bias is “Baked In”?

We collect data in order to answer questions, generate new insights, and better understand the world around us. One popular means of collecting data is the survey. Surveys often consist of specific measurement scales intended to capture the particular concepts or constructs we are studying (e.g., employee engagement, psychological safety, transformational leadership), which in turn allow us to examine the relationships among these constructs (e.g., how psychological safety affects employee voice).[v]

Generally, these measurement scales have been through a rigorous review process and have been validated and thoroughly tested. This is particularly true when they are published in reputable journals, which often leads to a large body of accumulated evidence on the specific construct and its associated measurement scale. Once a measure becomes accepted (i.e., is commonly used in research studies), it is rarely questioned. For example, if you are studying psychological safety, you are expected to use the established measure of psychological safety. Because of this, we seldom ‘look behind the curtain’ to see how the scales we are using were initially developed.

So, what happens when bias is ‘baked into’ an existing measurement scale?

An Example of the Measurement Scale Problem

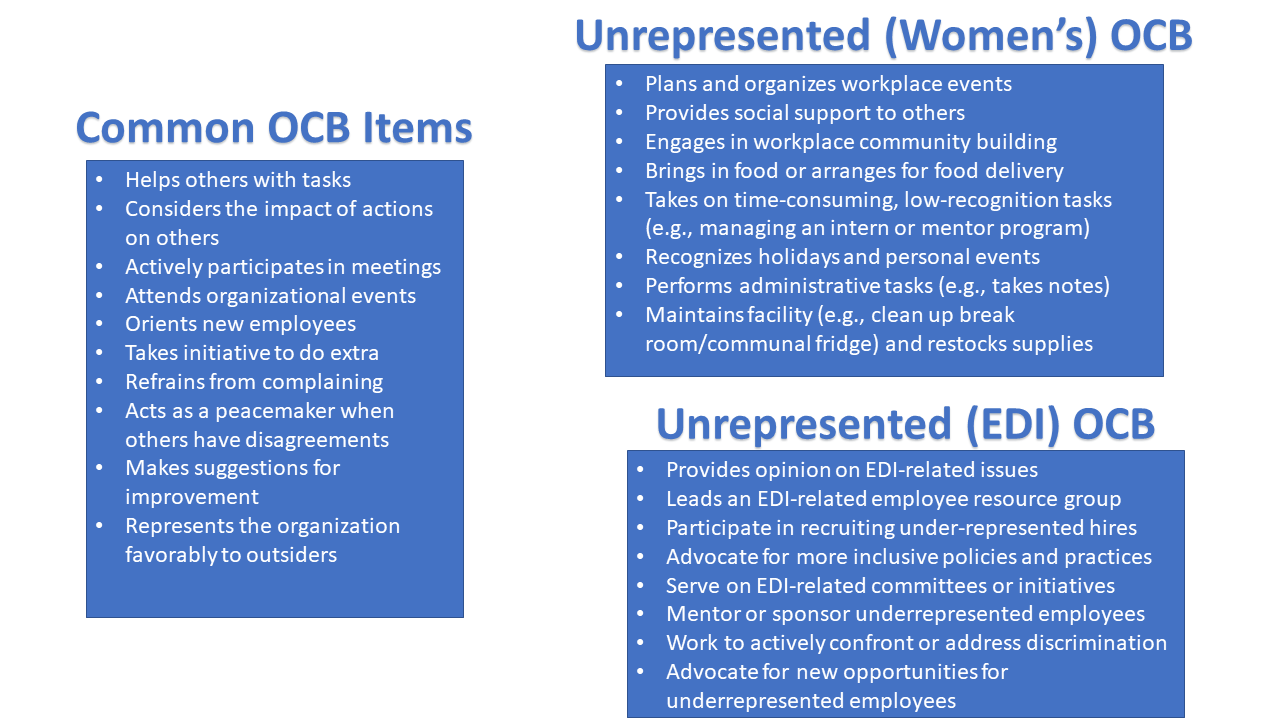

One of my main research areas is organizational citizenship behavior (OCB), the ‘extra’ helping behaviors that many employees engage in at work (e.g., passing on information, sharing expertise, socializing new employees, listening to coworkers’ concerns and issues, attending workplace events).[vi, vii] There is a great deal of interest in this construct because these behaviors make groups and organizations more effective, successful and profitable.[viii]

Interestingly, most studies find no sex differences in OCB. In fact, two comprehensive, systematic reviews of the OCB literature confirm that men and women (seem to) exhibit similar levels of OCB.[ix, x] This is despite a clear theoretical link between these types of behaviors and pervasive societal expectations that women should be helpful, caring and concerned with others’ outcomes.[xi, xii] This (lack of) finding always puzzled and frustrated me. It also had a stifling effect on research on sex differences in OCB. Although many researchers anecdotally observed that women engaged in more OCB than men, these casual observations were not supported empirically. Basically, the narrative was: if the research tells us there aren’t any sex differences in OCB, then there really isn’t anything to study, is there?

Only recently did a colleague and I point out a potential explanation for the lack of sex differences in OCB: OCB measurement scales are ‘gendered’. That is, the most commonly used OCB scales were primarily developed on men and emphasize the citizenship behaviors in which men (rather than women) engage[xiii] (see the full article for the sex composition of each scale). In some cases, the samples were 80-97% male.[xiv, xv, xvi, xvii] This means that women’s OCB is not represented in the measurement scales generally used to assess OCB, and is therefore missing from the findings and insights of studies using these scales. Given this, it is not surprising that meta-analytic reviews failed to detect sex differences.

The result is decades of potentially flawed research that likely provides an inaccurate and skewed picture of how women and men contribute to organizations. This research also fails to capture the negative consequences for women, such as work-family conflict[xviii] and poorer career outcomes.[xix, xx] Further, the consistent lack of sex differences suggests that women do all of the OCB that men do (i.e., the task-related behaviors represented in the scales), plus the types of non-task-related OCB mostly engaged in by women (i.e., the behaviors not represented in the scales, such as planning and organizing workplace events, providing social support and engaging in time-consuming, low-recognition tasks). The results of studies using these scales are therefore likely to be inaccurate because “… the problem is not that of searching for a needle in the haystack, but that of searching for a needle that is not in the haystack”[xxi] (p. 97, emphasis added).

Measures (and Measurement) Can Be a Way of Seeing … and a Way of Not Seeing

Measures can focus us, but they can also blind us to what is present but unseen (i.e., unmeasured). If a measurement scale did not include women in its development, there is a good chance it did not include women of color and, by extension, people of color. Depending on what the scale measures and the research question being investigated, biased measurement scales may have significant implications for equity, diversity and inclusion (EDI) work.

With regard to OCB, only one of the OCB scales examined reported racial demographics, with samples composed of 83-95% Caucasian participants.[xxii] It therefore seems fairly clear that EDI-related citizenship behaviors (e.g., participating in recruiting diverse candidates; serving on diversity-related committees or initiatives; advocating for more inclusive policies and practices; being consulted on EDI-related issues) are not captured in any current OCB scale, because the scales were not developed on diverse and representative samples. This means that, in the academic literature and in organizations, these types of helpful actions get ‘disappeared’[xxiii] and, in a way, the people who do them also get disappeared.

Some Questions to Ask Yourself About the Measurement Scale(s) You Plan to Use

To be clear, the issues I am raising are not specific to OCB measures. They are also relevant to other commonly used surveys and assessments (e.g., personality profiles, organizational culture, leadership development). Thus, if you use such surveys or assessments, especially those used to make high-stakes decisions (e.g., hiring, promotion, fast-tracking employees), it is important to know how these instruments were developed. For instance, if leadership measures were largely developed on men, research using these scales may unintentionally be promoting a masculine model of leadership, which disadvantages anyone who does not ‘fit’ that model.

Developing a measurement scale is a lengthy process (and one that is never fully complete). If you are less familiar with how measurement scales should be developed, educate yourself on the basic steps and guidelines. Some good places to start include DeVellis and Thorpe’s scale development book[xxiv] and various articles on the scale development process.[xxv, xxvi] For high-stakes assessments or broader organizational initiatives that rely on such measures, it is especially important to understand the history of how they were developed.

Prior to using a measurement scale, do some digging and do your due diligence. In addition to examining the standard scale development processes, here are some additional questions to ask:

- What do I really know about the measurement scale I am using?

- Is there a technical report available for the scale I am considering?

- Do I understand how it was developed and on whom it was developed?

- Who was left out or not represented?

- What experiences are missing or (mis)represented?

- In what ways is the development context similar to or different from my intended use?

- What underlying assumptions were made in the scale development process?

Where Do We Go From Here?

As social scientists, we are embedded within a larger disciplinary paradigm[xxvii] in which there is consensus about measurement and research methods. Many commonly used measurement scales were developed years, if not decades, ago, when issues of racism, sexism and other -isms were less salient. We must be vigilant and pay attention to the scales that we (and others) use in order to strengthen the foundations on which science is built. Just as Shelley Taylor made new discoveries when she realized that women (and their responses to stress) were missing from mainstream research, we must be cognizant of the dangers of using biased scales.

To be sure, we do not all have the luxury of time and resources to develop our own scales. Sometimes we need to adopt the attitude of ‘good enough is good enough.’ If there is only one scale available to you and you know there are problems with how it was developed, point this out as a specific limitation to raise awareness so that other researchers can work to address any issues.

In addition to examining the measurement scales that you use, examine those that others use. If you review journal or conference papers, pose such questions to the authors in your review. You do not necessarily have to investigate the origins of the scales they used yourself, but do ask them about scale development and encourage scrutiny of the measures. Depending on what they find, they may need to acknowledge additional limitations in their work, with implications for generalizability.

If enough of us become alert to these issues, the field will become more aware, and things will start to change. There are no perfect measurement scales, but we at least need to be upfront about the flaws that we find and how they may limit or contextualize the conclusions we draw.

Concluding Thoughts

What I learned from my experience with the OCB scales is to trust my intuition. If you read a research study and it does not fit with your experience or what you see in the world around you, find out why. Do not blindly accept research results (or use measurement scales) without understanding how they were developed. Just because something is published in a good journal does not mean that it captures the whole story. Often, going down these rabbit holes leads to new and interesting questions and future research directions. Even though research on OCB began in the 1980s and there are literally thousands of studies on the topic, it remains an open question whether women engage in more OCB than men. The problem of flawed OCB scales persists. We cannot rely on analyses to produce meaningful results if the scales we use are problematic, either in how they were developed or in terms of the samples on which they are used. The onus is on all of us to make the field better and ensure that we are not contributing research with ‘baked-in bias.’

i Cannon, W. B. (1932). The wisdom of the body. New York: Norton.

ii Taylor, S. E. (2012). Tend and befriend theory. In P. A. M. Van Lange, A. W. Kruglanski & E. T. Higgins (Eds.), Handbook of theories of social psychology (pp. 32-49). Thousand Oaks, CA: Sage Publications.

iii Taylor, S. E., Cousino Klein, L., Lewis, B. P., Gruenewald, T. L., Gurung, R. A. R. & Updegraff, J. A. (2000). Biobehavioral responses to stress in females: Tend-and-befriend, not fight or flight. Psychological Review, 107(3), 411-429.

iv APA Dictionary of Psychology

v Detert, J. R., & Burris, E. R. (2007). Leadership behavior and employee voice: Is the door really open? Academy of Management Journal, 50, 869-884.

vi Organ, D. W. (1988). Organizational citizenship behavior: The good soldier syndrome. Lexington Books.

vii Smith, C. A., Organ, D. W. & Near, J. P. (1983). Organizational citizenship behavior: Its nature and antecedents. Journal of Applied Psychology, 68, 653-663.

viii MacKenzie, S. B., Podsakoff, N. P., & Podsakoff, P. M. (2018). Individual- and organizational-level consequences of organizational citizenship behaviors. In P. M. Podsakoff, S. B. MacKenzie & N. P. Podsakoff (Eds.), Oxford handbook of organizational citizenship behavior (pp. 105-147). Oxford University Press.

ix Ng, T. W. H., Lam, S. S. K., & Feldman, D. C. (2016). Organizational citizenship behavior and counterproductive work behavior: Do males and females differ? Journal of Vocational Behavior, 93, 11-32.

x Organ, D. W., & Ryan, K. (1995). A meta-analytic review of attitudinal and dispositional predictors of organizational citizenship behavior. Personnel Psychology, 48, 775-802.

xi Eagly, A. H. (1987). Sex differences in social behavior: A social-role interpretation. Erlbaum.

xii Eagly, A. H., & Wood, W. (2012). Social role theory. In P. A. M. Van Lange, A. W. Kruglanski & E. T. Higgins (Eds.), Handbook of theories of social psychology (pp. 458-476). Sage Publications.

xiii Bergeron, D. M. & Rochford, K. (2022). Good soldiers versus organizational wives: Does anyone (besides us) care that OCB scales are gendered and mostly measure men’s – but not women’s OCB? Group & Organization Management. doi.org/10.1177/10596011221094421

xiv Motowidlo, S. J., & Van Scotter, J. R. (1994). Evidence that task performance should be distinguished from contextual performance. Journal of Applied Psychology, 79, 475-480.

xv Podsakoff, P. M., & MacKenzie, S. B. (1994). Organizational citizenship behaviors and sales unit effectiveness. Journal of Marketing Research, 31, 351-363.

xvi Podsakoff, P. M., MacKenzie, S. B., Moorman, R. H., & Fetter, R. (1990). Transformational leader behaviors and their effects on followers’ trust in leader, satisfaction, and organizational citizenship behaviors. The Leadership Quarterly, 1, 107-142.

xvii Van Scotter, J. R., & Motowidlo, S. J. (1996). Interpersonal facilitation and job dedication as separate facets of contextual performance. Journal of Applied Psychology, 81, 525-531.

xviii Bolino, M. C., Flores, M. L., Kelemen, T. K. & Bisel, R. S. (2022). May I please go the extra mile? Citizenship communication strategies and their effect on individual initiative OCB, work-family conflict, and partner satisfaction. Academy of Management Journal. doi.org/10.5465/amj.2020.0581

xix Bergeron, D. M., Ostroff, C., Schroeder, T. & Block, C. J. (2014). The dual effects of organizational citizenship behavior: Relationships to research productivity and career outcomes in academe. Human Performance, 27, 99-128.

xx Bergeron, D. M., Shipp, A. J., Rosen, B., & Furst, S. (2013). Organizational citizenship behavior and career outcomes: The cost of being a “good citizen.” Journal of Management, 39, 958-984.

xxi Nunnally, J. C. (1967). Psychometric theory. McGraw-Hill.

xxii Maynes, T. D., & Podsakoff, P. M. (2014). Speaking more broadly: An examination of the nature, antecedents, and consequences of an expanded set of employee voice behaviors. Journal of Applied Psychology, 99, 87-112.

xxiii Fletcher, J. K. (1999). Disappearing acts: Gender, power and relational practice at work. Cambridge, MA: MIT Press.

xxiv DeVellis, R. F. & Thorpe, C. T. (2022). Scale development: Theory and applications (5th edition). Thousand Oaks, CA: Sage Publications.

xxv Hinkin, T. R. (1995). A review of scale development practices in the study of organizations. Journal of Management, 21, 967-988.

xxvi Hinkin, T. R. (1998). A brief tutorial on the development of measures for use in survey questionnaires. Organizational Research Methods, 1, 104-121.

xxvii Pfeffer, J. (1993). Barriers to the advance of organizational science: Paradigm development as a dependent variable. Academy of Management Review, 18, 599-620.