By Michelle Schneider & Jayke Hamill

Over the last several years, there has been a growing movement within the social sector and the field of evaluation to better listen to and elevate the voices of the people served by gathering feedback about their experiences receiving services or training. 1 2 3 Additionally, as the social sector moves toward greater equity, diversity and inclusion practices, leaders are also finding greater value in listening to the people they serve and using feedback as an inclusive driver that has the potential to disrupt outdated programs and evaluation practices that may reinforce systemic inequities.4 5

Listening is one of the most essential skills that leaders need to navigate today’s complex and uncertain times. We train thousands of leaders every year on how to have better conversations and build trust by being a better active listener. But, we don’t stop at training others to actively listen. We’re practicing it too, with our colleagues, our teams, across functions, and just as important – with the participants we serve.

Human-centered design is at the core of our trainings, creating authentic experiences where participants feel recognized and valued. We try to take a human-centered approach in our evaluation work as well. We believe that one key to better understanding why a training program works is to listen to and learn from the people participating in it.

Case in Point: The Ability Leadership Project – North Carolina (ALP-NC)

In 2018, the Center for Creative Leadership (CCL)® partnered with Disability Rights North Carolina and funder North Carolina Council on Developmental Disabilities to develop and deliver an inclusive, state-wide disability advocacy leadership development training and a plan for evaluation. After a year of deeply listening to individuals from across the disability advocacy community, learning about programs, and identifying challenges and barriers to accessing training, CCL and its partners designed and launched a first-of-its-kind leadership training. The program brings together people with intellectual and other developmental disabilities (I/DD) and people without I/DD, who are often family members, professionals and allies of those with I/DD.

Individuals with I/DD have to navigate within systems that often fail to listen to their needs and lived experiences. A core part of the program design was to build and prioritize their voices, experiences, and stories as powerful instruments for influence and change. Similarly, we wanted our evaluation practices to match the intent of the design, intentionally allowing opportunities for feedback from participants in this pilot program that would provide ongoing program improvement.

We systematically and regularly asked participants for feedback during the pilot training, including what we were doing well and how we could improve. As a result, we have been able to use data to make real-time changes to the content and process to meet the needs of the participants. This has also allowed us to plan for changes to delivery and evaluation in future cohorts.

While we’re still working on our final evaluation report, we offer some key lessons learned during this pilot with our efforts to be intentional about listening and learning from program participants:

1) Create an evaluation partnership built on collaboration.

Collecting participant feedback, or other evaluation data, often falls to a single individual to make sure it happens – and often at the end of a program.

From the start of this project, our evaluation staff were valued as partners and invited to planning sessions with trainers and funders to learn and ask questions. As a result, we collectively identified opportunities to collect data at the start of, during, and at the end of training. Not only did our involvement help clarify the design, but it also allowed evaluation staff to better understand the context in which the training was being offered. Additionally, it helped build trust between us as stakeholders, and positioned the data as valuable and critical to continuous improvement.

2) Clarify the purpose of collecting feedback.

Research has shown that one of the biggest challenges in using participant feedback effectively is that its purpose and how it will be used are often not discussed among stakeholders. This often results in feedback being collected more for funder and performance purposes than for learning or accountability to participants.6

Due to the pilot nature of the program, our internal team, as well as the client and the funder, were very clear that participant feedback would serve two purposes: help us understand the impact of the program and help us learn what was working about the program and what we might need to change in real-time. Being clear on purpose (learning) prevented us from making assumptions and reminded us that making time to review and discuss the participant data was a foundational priority.

3) Build time for feedback into the program schedule, not after the fact.

While it never seems like there’s enough time to deliver all of the content, we prioritized participant voices by building time into the training day schedule to complete surveys or participate in a focus group. Doing this allowed us to achieve a near-perfect response rate after every session, ensuring more diverse and consistent feedback. In addition, it didn’t burden participants with having to complete something else after or in between the trainings, and it didn’t position evaluation as an afterthought.

4) Prioritize accessibility and use multiple feedback tools and formats.

Given the participants we were serving, accessibility was top of mind for us. We used a mix of feedback mechanisms, including surveys toward the end of each session that included Likert-scale questions, as well as open-ended questions where participants were free to express themselves in their own words. We also hosted a virtual focus group at the end of the program, during which we quickly realized that some people were less inclined than others to share verbal feedback. In response, we encouraged the use of the chat function as another way to offer feedback, which made a significant impact on some participants’ ability to contribute. Some of the deepest impacts, as well as suggestions for improvements, were shared through the chat feature made available through our digital training platform.

5) Formally reflect on results.

Research has shown that nonprofits collect data from participants but often don’t have the time or capacity to reflect on and learn from the data.2 6 Not only is this not useful, but asking participants to provide their perspective and then not learning from or applying it could damage relationships with them.

As part of this training, we instituted a formal process to reflect on participant feedback after each session so it stayed front-of-mind. After each session, an After Action Review (AAR) was scheduled with the client and the funder. In preparation for those meetings, participant feedback was aggregated into reports, which resulted in rich dialogue that frequently led to training content adaptations, new ideas, and the possibility of significant structural changes that may take place in future sessions or iterations of the program model.

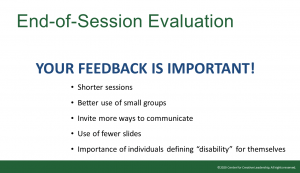

6) Let participants know their feedback made a difference, and in what way.

One of the biggest criticisms around participant feedback is that nonprofits never “close the feedback loop”: they ask the people they serve for their time and perspectives, and then never report back on how that feedback was used. We closed the loop by being transparent with participants about how we reviewed their feedback in detail (though in aggregate and de-identified) and made changes to the sessions as a result. Figure 1 shows an example of the information we shared with participants. As a result, we noticed a marked increase in session and overall feedback. The quote is from a participant who expressed gratitude in the following session survey for revisiting a discussion about the definition of disability, which participants identified as an area for improvement in a previous session.

Figure 1

7) Leverage participant data to more deeply explore perspectives.

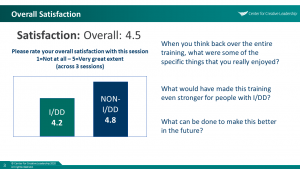

When we analyzed participant survey data, we noticed participants with I/DD consistently rated session satisfaction lower than those without I/DD. Rather than draw our own conclusions as to why that might be, we brought the data back to the participants with targeted questions as part of a focus group. Figure 2 shows an example of how we used participant survey data to frame our focus group dialogue. As a result, we were able to better understand participant feedback, to think about the design for the next cohort, and to share back participant data.

Figure 2
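For readers who want to try a similar comparison with their own session surveys, here is a minimal Python sketch of the kind of check described above. It is not the analysis we ran: the ratings are made-up values for illustration only, and the group labels, the 1 to 5 scale, and the function names are all assumptions. It averages de-identified ratings by session and group, then lists the sessions where one group's average falls below the other's.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical, made-up responses for illustration only (not ALP-NC data).
# Each record: (session number, participant group, rating on an assumed 1-5 scale)
responses = [
    (1, "I/DD", 4), (1, "I/DD", 3), (1, "no I/DD", 5), (1, "no I/DD", 4),
    (2, "I/DD", 3), (2, "I/DD", 4), (2, "no I/DD", 4), (2, "no I/DD", 5),
]


def mean_by_session_and_group(records):
    """Return {(session, group): mean rating} for de-identified records."""
    buckets = defaultdict(list)
    for session, group, rating in records:
        buckets[(session, group)].append(rating)
    return {key: mean(values) for key, values in buckets.items()}


def sessions_with_gap(means, group_a, group_b):
    """List sessions where group_a's mean rating is below group_b's."""
    sessions = sorted({session for session, _ in means})
    return [
        s for s in sessions
        if (s, group_a) in means
        and (s, group_b) in means
        and means[(s, group_a)] < means[(s, group_b)]
    ]


means = mean_by_session_and_group(responses)
gap_sessions = sessions_with_gap(means, "I/DD", "no I/DD")

for (session, group), value in sorted(means.items()):
    print(f"Session {session}, {group}: mean rating {value:.1f}")
print("Sessions to bring back to participants:", gap_sessions)
```

A summary like this only shows where a gap exists. Understanding why still requires taking the pattern back to participants, and with a small cohort, differences in averages should be read with caution.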

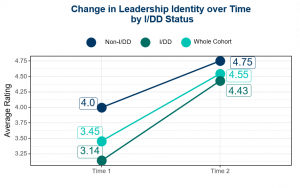

We’re using results to begin planning for the next cohort. Being intentional about participant feedback does require commitment and additional effort by trainers, partners and evaluation staff. Although we are early in this journey, it’s been worth the time and investment in terms of strengthening participants’ connection to the program. At least half of participants have enrolled in our optional second year, in which they will be trained to become future trainers for other disability rights advocates. This is not surprising: although the sample size is small, data emerging from our initial baseline and endpoint surveys show that all participants’ scores increased in their perceptions of themselves as leaders in the community, with the most notable increases among people with I/DD. Figure 3 shows changes in leadership identity from Time 1 to Time 2 based on I/DD status.

Figure 3
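A baseline-to-endpoint comparison like the one in Figure 3 can be sketched in a few lines of Python. This is an illustration under stated assumptions, not our actual instrument or results: the scores below are invented, and the participant IDs, group labels and function name are hypothetical. The sketch computes each participant's change from Time 1 to Time 2 and averages those changes within each group.

```python
from statistics import mean

# Hypothetical, made-up scores for illustration only (not ALP-NC data).
# Each record: (participant id, group, Time 1 score, Time 2 score)
scores = [
    ("p1", "I/DD", 2.0, 4.0),
    ("p2", "I/DD", 3.0, 4.0),
    ("p3", "no I/DD", 3.5, 4.0),
    ("p4", "no I/DD", 4.0, 4.5),
]


def mean_change_by_group(records):
    """Average each participant's (Time 2 - Time 1) change within their group."""
    changes = {}
    for _, group, time1, time2 in records:
        changes.setdefault(group, []).append(time2 - time1)
    return {group: mean(values) for group, values in changes.items()}


change = mean_change_by_group(scores)
for group, value in change.items():
    print(f"{group}: mean change {value:+.2f}")
```

Pairing each person's two scores, rather than comparing group averages at each time point, keeps the focus on individual growth, which matters when the cohort is small.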

We are also proud to share that our program satisfaction scores at the completion of our initial pilot program were high, as were ratings on sense of pride in being part of the ALP-NC inaugural cohort and being able to make meaningful connections with fellow cohort members. Although it is too soon to tell, we are curious about how these meaningful connections will continue to serve these participants as they grow as advocate leaders and trainers through this project. We hope our own ability to model intentional listening in our practice as designers and evaluators helped reinforce what advocacy and reciprocity can look like in real time.

References

1Also referred to as Constituency Voice, Beneficiary Feedback, Feedback Loops

2Threlfall, V. & Gulley, K. (2019). What Social Sector Leaders Think About Feedback. Stanford Social Innovation Review, 11(2), 40-45.

3Twersky, F., Buchanan, P., & Threlfall, V. (2013). Listening to those who matter most, the beneficiaries. Stanford Social Innovation Review, 11(2), 40-45.

4Campbell, M. & Lake, B. (2019). Equity and the Global Feedback Movement. Center for Effective Philanthropy. https://cep.org/equity-and-the-global-feedback-movement/

5Buteau, E., Chaffin, M., & Gopal, R. (2014). Transparency, Performance Assessment, and Awareness of Nonprofits’ Challenges: Are Foundations and Nonprofits Seeing Eye to Eye? The Foundation Review, 6(2), 7.

6Bryan, T. K., Robichau, R. W., & L’Esperance, G. E. (2020). Conducting and utilizing evaluation for multiple accountabilities: A study of nonprofit evaluation capacities. Nonprofit Management and Leadership.

Special thanks to Tim Leisman, our former colleague, who was the initial evaluation partner on this project. His insights and passion for this work helped lay the foundation for a strong pilot training year centered on the participant experience.